Contents

- Is the Google Webspam Team Too Draconian?

- Google Says You Can Have More Than 100 Links Per Page

- Are Google Sitemaps Important for SEO?

- Why Googlebot Stopped Coming To My Site And How I Got It Back

- How I Blocked Googlebot

- Webmasters Are Hacking Sites to Game the Search Engines

- Google Is Only Human – Error Leads to Every Website Declared as Harmful

- How Ads Can Screw Up Your SEO: Bloomberg.com Example

- SEO Tip: Sitemap Protocol using robots.txt

This page lists posts that are specifically about SEO for Google, which really means advice from Google, or advice from me about Google. I have learnt a few things over the years, and made several mistakes, so I have documented them here. Newest posts first.

Is the Google Webspam Team Too Draconian?

Jan 4, 2012

“Even though the intent of the campaign was to get people to watch videos–not link to Google–and even though we only found a single sponsored post that actually linked to Google’s Chrome page and passed PageRank, that’s still a violation of our quality guidelines,” – Matt Cutts on Google+

This led to a 60 day penalty. It still shocks me that Google will penalise a website even though it knows that there was no intention to game the system. People make mistakes. Someone could spend years raising awareness about a product or service and then 1 poorly constructed bit of code could lose them 60 days of business (at least).

The argument for this seems to be that the client (in this case Google’s own Chrome) can submit a reinclusion request once they have cleared up the problem. However, a reinclusion request is only useful when you know why you were penalised. Unfortunately, a webmaster who receives a penalty rarely knows why it has been applied.

At least I did not when I had a big problem in 2010. I received a penalty and only by a chance conversation with the Adwords team did I find out the cause of my problem, which then allowed me to set about fixing it.

Full transparency would surely be when Google send webmasters the details of the violation so that they can then act to resolve the problems.

I am all for stomping out the link buyers (I see enough of them taking my business!) but what I do not understand is why Google cannot just devalue the bad links and let the good work still stand. Maybe the fear of a penalty is what stops some people from buying links. But in this case they were just buying advertising that passed PageRank (in error).

Google Says You Can Have More Than 100 Links Per Page

Mar 5, 2011

Google once provided a guideline that said you should not have more than 100 links on a page. Many webmasters still strictly follow this rule.

However, Matt Cutts explained in January 2011 that this advice is about 8 years old, and Google have now actually removed this rule from their guidelines. The rule was originally set because in the early days of Google only part of a page would be crawled; it was limited to about 100kb. That is no longer the case.

But for SEO purposes it may still be better for most pages to have fewer links, as this can concentrate (or funnel) PageRank.
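The funnelling idea can be illustrated with the simplified PageRank model as originally published, in which a page splits the rank it passes on equally among its outbound links. The sketch below assumes that model and a damping factor of 0.85. Google's live ranking is certainly more complicated, so treat the numbers as illustration only.

```python
def rank_passed_per_link(page_rank: float, outbound_links: int, damping: float = 0.85) -> float:
    """Rank each link passes on under the simplified PageRank model (an assumption)."""
    if outbound_links <= 0:
        raise ValueError("a page needs at least one outbound link to pass rank")
    # The page's passable rank is divided equally between all of its links.
    return damping * page_rank / outbound_links


if __name__ == "__main__":
    for links in (10, 100, 300):
        print(links, "links ->", rank_passed_per_link(1.0, links), "per link")
```

The more links on the page, the smaller the share each one receives, which is the whole argument for keeping the count down.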

Are Google Sitemaps Important for SEO?

Oct 10, 2010

Yes.

OK, I probably need to elaborate.

A few years ago some SEOs I was speaking to felt that a Google sitemap, i.e. placing a sitemap.xml on your site and linking to it in Google Webmaster Tools, was not really a requirement, as it does not help your SEO.

This may of course still be the case, but is it worth not bothering, considering how easy it is to put on there?
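To show how little effort is involved, here is a minimal sketch that builds a sitemap.xml from a list of URLs using only the Python standard library. The example.com addresses are placeholders, and the function name is my own invention; most CMS plugins will do this for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap.xml document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped for us
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder URLs, not a real site.
    print(build_sitemap(["https://www.example.com/", "https://www.example.com/about"]))
```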

Recently I got a little insight into how much Google does use a sitemap. It relates to the little mishap I had the other day when I accidentally blocked googlebot (see below).

According to my Google Webmaster Tools, at the moment there are many errors from between 2nd and 4th October, all 403 errors that Googlebot encountered when trying to visit my site. The distribution of errors is interesting.

- HTTP (1,681)

- In Sitemaps (367)

This means that Googlebot hit 1,681 errors while crawling my site via links on the page (internal links). It also encountered 367 errors while trawling the sitemap.

Now the sitemap has a lot more than 367 entries, so I do not know why it only crawled 367 pages. It could be because Googlebot does not look too deep into the sitemap.xml file, or it only takes a certain percentage of the pages. Or it could be because it abandons its attempt after so many failures. But what it does show is that Google does look at those sitemaps to gain extra insight into what is on your website.

Internal Links Are King – Or Don’t Make Google Hunt

Many people still stick to the “content is king” rule for SEO, and this is certainly still the case. But if you want your whole website crawled by Google, you also have to follow the “Don’t Make Me Think” (that’s a popular usability book by Steve Krug) approach, or more specifically “Don’t Make Google Hunt”.

To do this you need to ensure that all of your web pages are easily found by googlebot. Really, the same rules apply to googlebot as to a human visitor. If your website is well planned, with good navigation, then it should be possible for a visitor to find your content within a few clicks. This is the guideline given by Google.

So, do not leave pages buried on your website, with people (and googlebot) having to follow a long trail before they can find the page. Ideally all pages should be accessible within 3 clicks of the home page. A good example would be:

- Click main index

- Click section index

- Click page link

Or:

- Click main index

- Click section index

- Click a general page

- Click page link within this

For a small site this is easy to achieve, but once you have over 1000 pages then navigation becomes a bigger issue.
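On a bigger site the click-depth guideline can be checked mechanically. The sketch below assumes you already have your internal links as a dictionary mapping each page to the pages it links to (how you collect that is up to you), and uses a breadth-first search from the home page. The function names and the sample paths are made up for illustration.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest route
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_deeper_than(links, home, max_clicks=3):
    """Pages that need more than max_clicks, including pages nothing links to."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(p for p in all_pages if depths.get(p, float("inf")) > max_clicks)


if __name__ == "__main__":
    site = {"/": ["/index"], "/index": ["/section"], "/section": ["/page"], "/page": ["/deep"]}
    print(pages_deeper_than(site, "/"))
```

Anything this reports is a page that visitors (and googlebot) have to hunt for.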

You need to be able to ensure that people can find your content without giving them too many options at once. It can be tempting to create massive index pages to provide all 1000 links, but this does not address the usability issues. Have you ever tried to find something by searching through a list of 1000 items?

If you know there is something there you want to read, then this is possible. But if you are browsing, then it is unlikely you will go far beyond the first page (“above the fold”) before getting bored and visiting a better website.

So the trick is to order your content in bite sized chunks and provide as many useful sub-categories as possible without flooding your readers with too many options.

Why Googlebot Stopped Coming To My Site And How I Got It Back

Oct 5, 2010

OK, today was one of the most worrying days for me so far. This is how the day panned out.

This morning I was reviewing a website in the Google index as new pages were not being indexed. For the site in question new pages are generally crawled and indexed in about 10 minutes, sometimes less, sometimes more. The Google cache for the home page and main internal pages is also updated most days.

So today I noticed that the cache had not been updated for 3 days. This was unusual. So, what did I do? Rather than just say what I did, I will write it as a task list should you find yourself in this situation. (edit – this all went wrong, started talking about what happened again below, bear with me, I am not going to rewrite it properly…).

1. Check Google Webmaster Tools

First check Site Configuration > Crawler Access. Can Google access your robots.txt, or is it blank? (mine was blank).

If it is blank, test again; if it fails, find out why. Go to Labs > Fetch as Googlebot. Attempt to fetch your homepage first. If you can fetch it, then Google can crawl you, but has chosen not to. This is a different problem. In my case Google returned a 403 error: HTTP/1.1 403 Forbidden.

In SEO terms this is known as a fucking disaster. Why could Googlebot not access my site?

2. Check Your IP Block List

Now, I run the site in question on WordPress and have WP Firewall installed (created by SEO Egghead) that alerts me of possible threats, generally when someone attempts to access part of the site that they should not as they are sniffing around for vulnerabilities. When I get these reports I always check the IP address and then block them in .htaccess if they are a bit suspect.

Although, it is possible that on the 2nd October I failed to check this one sufficiently:

WordPress Firewall has detected and blocked a potential attack!

Web Page: /news.php?page=../../../../../../../../../../../../../../../../../../../proc/self/environ

Warning: URL may contain dangerous content!

Offending IP: 66.249.66.137 [ Get IP location ]

Offending Parameter: page = ../../../../../../../../../../../../../../../../../../../proc/self/environ

Because on checking the IP address, 66.249.66.137 is Google, Mountain View. Oops. I blocked Google, what a twat.

How did I find out? The long and hard way. I removed all the IP addresses that I had blocked in the last week and then added them back a few at a time and ran the Labs > Fetch as Googlebot again until I found out which IP was being blocked.
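A check that would have saved me the trouble is the reverse-then-forward DNS lookup that Google recommends for confirming a crawler: look up the hostname for the IP, make sure it sits under googlebot.com or google.com, then resolve that hostname and confirm it points back to the same IP. The sketch below is my own rough version of that. The function names are invented, the domain list may not be complete, and the lookups can be swapped out for testing.

```python
import socket

# Domains Google documents for its crawler hostnames (this list is an assumption and may be incomplete).
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_google_hostname(hostname):
    """True if the hostname ends in one of the crawler domains above."""
    return hostname.lower().rstrip(".").endswith(GOOGLE_CRAWLER_DOMAINS)


def is_verified_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Reverse DNS, check the domain, then forward DNS must return the same IP."""
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip)
        if not is_google_hostname(hostname):
            return False
        return ip in forward_lookup(hostname)
    except OSError:  # covers failed lookups (socket.herror / socket.gaierror)
        return False


if __name__ == "__main__":
    # Performs real DNS lookups, so the result depends on your network.
    print(is_verified_googlebot("66.249.66.137"))
```

If this returns True for an IP in a firewall report, do not put it in .htaccess.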

So, good news! I found the problem. Bad news, Google was dropping me from the index like I was a bit of shit on its shovel.

Google Dropping Pages from the Index

I checked several pages in the morning, and by the time I had discovered the problem many of these were out of the index already, or at least shoved into the “supplemental index”, meaning that they were there for a site:url search but did not appear for any keywords, not even unique quotes from the page.

OK, so Googlebot was allowed back in the site, but pages were still being dropped.

Back to Google Webmaster Tools

I double checked in Site Configuration > Crawler Access that Google could access the site. It could. Tick.

Then I checked the XML sitemaps and clicked Resubmit on all of them. For sitemaps I have:

- /sitemap.xml

- /feed

- /comments/feed

The feeds were only added today (as suggested by Google in Webmaster tools).

Send a Reinclusion Request

This may seem extreme, but Google reinclusion / reconsideration requests are not just for when your site is flagged as spam or as containing malware. They can also be used when you just want to tell Google that you think something has upset the index and you would like it reviewed. They actually say:

“If your site isn’t appearing in Google search results, or it’s performing more poorly than it once did.”

So a site dropping out as a result of you (me) blocking an IP address accidentally falls into that category.

Add Some New Content and Update Some Articles

Next thing I did was deactivate my WordPress Ping controller. WordPress by default pings websites whenever you publish or edit an article. As I do a lot of editing, I deactivated pinging on edit using MaxBlogPress Ping Optimizer.

I edited about 5 articles, republishing for today’s date, so that they appear on the front page and at the top of the RSS feeds. They also appear as new on the xml sitemap, although the URLs all remain the same. This is not something you should really do all the time, but it is handy for sending out a wake up call to the bots.

Then I published a couple of new articles just to give the ping services and Google an extra kick.

Check Access Logs

Next I checked the access logs and thankfully I could see that Google did start crawling the site again. What you need to look for on an access log is something like (or identical to): Agent: Mozilla/5.0 (compatible; Googlebot/2.1; +http://google.com/bot.html)

This is the Googlebot (according to a forum I found earlier today) that does a deeper crawl of the site. This is a good sign. But just checking the logs for “googlebot” should pick up any googlebot.

For example Agent: portalmmm/2.0 N500iS(c20;TB) (compatible; Mediapartners-Google/2.1; +http://google.com/bot.html) is the bot that Adwords / Adsense uses to determine the content of your site. Not sure if that one is Adsense (for displaying contextual adverts) or Adwords (for calculating quality score) but either way good if they are looking. Note that both of the Googlebots come from IP 66.249.66.137.
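If you would rather not eyeball the logs, a few lines of Python can count the Google crawler hits for you. This is only a sketch: it matches on the two user agent names quoted above, makes no assumption about the rest of the log format, and the function name is my own. Remember that user agents can be faked, so this shows who claims to be Google, not who is.

```python
import re
from collections import Counter

# Matches the two crawler names seen in the log lines above, ignoring case.
CRAWLER_PATTERN = re.compile(r"googlebot|mediapartners-google", re.IGNORECASE)


def count_google_crawlers(log_lines):
    """Count log lines per Google crawler name found in the line."""
    counts = Counter()
    for line in log_lines:
        match = CRAWLER_PATTERN.search(line)
        if match:
            counts[match.group(0).lower()] += 1
    return counts


if __name__ == "__main__":
    sample = [
        'GET / "Mozilla/5.0 (compatible; Googlebot/2.1; +http://google.com/bot.html)"',
        'GET / "Mozilla/5.0 (Windows NT 10.0)"',
    ]
    print(count_google_crawlers(sample))
```

To run it against a real log, pass an open file instead of the sample list, for example `count_google_crawlers(open("access.log"))`, where the filename is just a placeholder for wherever your host keeps its logs.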

So in addition to actually blocking Google from crawling and indexing my site I was blocking Adwords bots from visiting, which probably also explains why my eCPM crashed yesterday. And that was before the major traffic crash.

Yesterday was the worst Monday I have experienced on this site (not *this* site, but the site I am talking about….) in a long time. I originally thought that I was still suffering as a result of the recent site restructure, which saw me move around over 1000 articles, many articles having well over 1000 words (the biggest having 41,000 words) and many being ranked No.1. in Google for their keywords.

Looking To The Future

The good news is that pages are being re-indexed now. This afternoon at the height of the disaster even the homepage was not showing for the domain name or brand name – instead content thieves and brand thieves were showing on top of the SERPs.

But the home page is back, the Google sitelinks are back (with new ones to boot) and some of the major keywords are back too.

Overall the site is still suffering, a combination of a major restructure and then blocking Google has caused untold damage, but hopefully time will heal.

So, What Actually Cured the Problem?

Whenever testing things, you should do one thing at a time. But I did not want to wait for this, so just charged in doing everything possible to resolve the problem. Any one of these may have been enough to fix the problem, or it may have been a combination:

- Unblocking the IP address. This is the only sure thing that started getting the site back in the index.

- Adding the feeds to the sitemaps

- Resubmitting the sitemap

- Testing the robots.txt

- Republishing articles and pinging

- Writing new content

- Sending a reinclusion / reconsideration request

Lessons for the Day

- Never block an IP address unless you are 100% sure it is not a good bot. Firewalls give false positives, although I still have no idea why Google requested that URL (it does not actually exist on the site).

- If the shit hits the fan take a deep breath and analyse the situation. Look at the logs. Know your logs. Until today I did not know how often Googlebot visits my site. Webmaster tools is always a few days out of date, so it looks healthy as the latest report is 2nd. I will probably see the problem in Webmaster tools tomorrow.

- Keep a log of changes so that you can quickly reverse things. Since I started the site restructure about 6 weeks ago I have kept a log of all (almost all) changes. The spreadsheet is now about 500 rows long.

- DO NOT PANIC.

How I Blocked Googlebot

Oct 5, 2010

OK, things have not been going too great since the restructure, but I have been ploughing on all the same. Yesterday was the worst Monday in several years, a massive step back.

I took a look at the SERPs and noticed that my pages had not been updated in Google for a few days; generally updates happen every day. Also I have lost a lot of very old keywords, where I was no.1 for years, now nowhere at all.

I took a look at Webmaster Tools, and did a Fetch as Googlebot. Got a 403 Error. Huh?

Now, when I get firewall reports I generally block the IP. Usually such things come from Brazil, Poland, far eastern places etc.

On 2nd October I got this one:

Web Page: /news.php?page=../../../../../../../../../../../../../../../../../../../proc/self/environ

Warning: URL may contain dangerous content!

Offending IP: 66.249.66.137 [ Get IP location ]

Offending Parameter: page = ../../../../../../../../../../../../../../../../../../../proc/self/environ

My belief was that anything that sends such a request is sniffing around for weaknesses to hack. I admit I usually check the IP before blocking but obviously did not on this day. That IP is Google, Mountain View.

This morning I deleted out all the IPs that I had blocked in the last week and started replacing them and trying to fetch as googlebot until it broke again. That IP broke it.
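Adding the IPs back a few at a time works, but halving the list each round gets there in fewer fetches. Here is a rough sketch of that idea. It assumes exactly one blocked IP is the culprit, and `fetch_fails_with` is a hypothetical callback standing in for the manual step of putting those blocks back in .htaccess and running Fetch as Googlebot.

```python
def find_blocking_ip(candidates, fetch_fails_with):
    """Return the one IP whose block breaks the fetch, by repeatedly halving the list.

    fetch_fails_with(ips) is a hypothetical callback: it should apply only the
    given blocks and return True if Fetch as Googlebot then fails.
    Assumes at most one culprit in the list.
    """
    remaining = list(candidates)
    if not remaining or not fetch_fails_with(remaining):
        return None  # none of these blocks is the problem
    while len(remaining) > 1:
        half = remaining[: len(remaining) // 2]
        # Keep whichever half still breaks the fetch.
        remaining = half if fetch_fails_with(half) else remaining[len(half):]
    return remaining[0]
```

With a week's worth of blocked IPs, say 30 or so, this needs about five or six fetches rather than working through the list a few at a time.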

Why did Google look for a page that does not exist?

Hopefully things will improve again, but I seem to have managed to upset Google yet again.

If any Googlers could check things their end and tell me if it is a minor setback or a major upset, that would be lovely.

This morning I started to think that it was time to pack it all in and go back to Banking. Not happy days!

Webmasters Are Hacking Sites to Game the Search Engines

Jul 30, 2009

Matt Cutts, Google’s spokesperson for its search engine spam team, has announced that there are more and more cases of cheeky black hat SEOers hacking into flaky websites to place their links, to game the Google search engine.

As everyone knows, gaining quality links is essential if you want your website to rank well in Google Search. However, due to the major Link Rush in recent years, people are generally less likely to link out to other websites now. People fear linking out, thinking that they will lose some of their “link juice” to other sites. Also, many webmasters receive hundreds of requests each week to link to other sites, from SEO outsourcing companies (or webmasters with a lot of time on their hands), and are generally of the opinion that link request cold callers should be treated in the same way as windows and conservatory salesmen. That is, ignored.

“As operating systems become more secure and users become savvier in protecting their home machines, I would expect the hacking to shift to poorly secured Web servers … this trend will continue until webmasters and website owners take precautions to secure Web-server software as well.” Matt Cutts, Googler.

Really, black hat SEOers have been using dodgy methods for years to win links. So why is Google only now announcing that there is a rise in this happening? Could it be a warning? Maybe, if we read between the lines, this is what Matt Cutts is really saying:

“We know how you game the search engines. We know how to spot the vulnerabilities in websites. We can tell the difference between an authorised link and a hacked link. Stop now, or be punished!”

Google Is Only Human – Error Leads to Every Website Declared as Harmful

Feb 1, 2009

Yesterday a strange thing happened on the internet – well on Google, but for many, Google *is* the internet. All searches made on the Google search engine were labelled as “This site may harm your computer”. Google kindly places a warning on search results whenever a site appears that has been known to distribute harmful software (i.e. viruses, trojans, spyware etc). This is a great service, as it greatly reduces the number of successful hacking/theft attempts online.

However, yesterday, for a short while, every single result was given a warning label. At first, we thought that Google had gone mad, and decided that the internet was evil after all, and following their “Don’t Be Evil” mantra, decided to declare all of it so. But no, Google had not gone mad, there was just a slight slip up in implementing the latest datafeed into the dodgy site database. Easily done, human error happens in all companies, and they have fully explained what went wrong. See their explanation here, from Marissa Mayer, VP, Search Products & User Experience:

If you did a Google search between 6:30 a.m. PST and 7:25 a.m. PST this morning, you likely saw that the message “This site may harm your computer” accompanied each and every search result. This was clearly an error, and we are very sorry for the inconvenience caused to our users.

What happened? Very simply, human error. Google flags search results with the message “This site may harm your computer” if the site is known to install malicious software in the background or otherwise surreptitiously. We do this to protect our users against visiting sites that could harm their computers. We maintain a list of such sites through both manual and automated methods. We work with a non-profit called StopBadware.org to come up with criteria for maintaining this list, and to provide simple processes for webmasters to remove their site from the list.

We periodically update that list and released one such update to the site this morning. Unfortunately (and here’s the human error), the URL of ‘/’ was mistakenly checked in as a value to the file and ‘/’ expands to all URLs. Fortunately, our on-call site reliability team found the problem quickly and reverted the file. Since we push these updates in a staggered and rolling fashion, the errors began appearing between 6:27 a.m. and 6:40 a.m. and began disappearing between 7:10 and 7:25 a.m., so the duration of the problem for any particular user was approximately 40 minutes.

Thanks to our team for their quick work in finding this. And again, our apologies to any of you who were inconvenienced this morning, and to site owners whose pages were incorrectly labelled. We will carefully investigate this incident and put more robust file checks in place to prevent it from happening again.

Thanks for your understanding.

Update at 10:29 am PST: This post was revised as more precise information became available (changes are in blue). Here’s StopBadware’s explanation.

Posted by Marissa Mayer, VP, Search Products & User Experience

Well done to Google for not only fixing the problem so quickly, but openly admitting to human error. Google is only human after all.
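It is easy to see how one stray ‘/’ could do this. Google's post does not say how the list is matched against URLs, so the sketch below simply assumes entries are treated as path prefixes. Under that assumption, every path starts with ‘/’, so the single bad entry flags everything.

```python
def is_flagged(url_path, flagged_prefixes):
    """True if the path starts with any listed entry (prefix matching is an assumption)."""
    return any(url_path.startswith(prefix) for prefix in flagged_prefixes)


if __name__ == "__main__":
    # A sensible list only flags the paths it names (placeholder entries).
    print(is_flagged("/news/today.html", ["/malware/"]))
    # Add a bare "/" and every path on every site matches.
    print(is_flagged("/news/today.html", ["/malware/", "/"]))
```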

How Ads Can Screw Up Your SEO: Bloomberg.com Example

Not actually a major problem, although without stats on Google sitelinks usage, it is really hard to know!

Jul 28, 2009

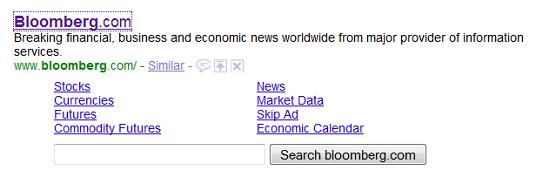

I love Bloomberg.com, it is possibly my favourite financial news website. Being the lazy person that I so often am though, I do sometimes use Google to navigate to the website, even though I am quite aware of how the address bar in the browser works. Today I spotted a strange sitelink (sitelinks are the links to internal pages that Google sometimes shows on the search engine results page). There was one for “SKIP AD”.

Here’s the proof:

However, we can learn something from this too. It has for years generally been considered bad practice to show full page adverts on your homepage, however, this does not appear to be the case, at least for Bloomberg. They still have a vast number of pages well listed in the search engines, and also a very relevant set of sitelinks and a search box below – something most webmasters aspire to!

So, are splash pages OK now? Can we use them without fear of penalty, so long as we provide an easy way to “SKIP AD”?

SEO Tip: Sitemap Protocol using robots.txt

Mar 25, 2008

OK, I have now been messing about with websites for almost two years, which really means that I am still a novice in this field, although a very keen novice that hopes to soon move on to apprentice level. However, I have learnt a lot about SEO over the last couple of years. Mostly from the guys that used to hang out at Search Guild, plus from random readings around the net. In fact, only today I learnt a new SEO tip, which came straight from the horse’s mouth – the horse being Google UK, and the mouth being Chewy Trewhella, who was speaking to .net magazine.

His tip was concerning sitemaps. He was actually talking about how the major search engines are trying to agree a sitemap protocol, so that webmasters only need to build one sitemap file, rather than the many that are currently recommended by the different search engines. Although this sitemap protocol has yet to be agreed, he did mention that the robots.txt file can be used to tell the search engines where the sitemap is, and this is done with:

sitemap:url

How simple is that?
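To check that a robots.txt really does advertise the sitemap, Python's standard library can read the directive back. A minimal sketch, assuming Python 3.8 or later (for `site_maps()`) and a made-up example.com robots.txt. Note that the value should be the full URL of the sitemap, not a relative path.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; example.com is a placeholder.
ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
"""


def sitemaps_declared(robots_txt):
    """Return the sitemap URLs declared in the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when there are none


if __name__ == "__main__":
    print(sitemaps_declared(ROBOTS_TXT))
```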

OK, I was going to go into detail about the various other SEO tips that I have learnt over the last couple of years, but now I think that deserves another post.